This article discusses the differences between Web2 and Web3 MMORPGs. Both offer massively multiplayer online role-playing experiences, but their underlying technologies and player ownership models differ significantly.

Web2 MMORPGs, the established model, are typically centralized. Game data resides on servers controlled by the developer, which also owns and manages all in-game assets. Players in effect rent access to the game world and its features, and have limited control over their in-game possessions. The developer retains full authority over game updates, monetization, and overall game direction. Examples include World of Warcraft and Final Fantasy XIV.
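To make the centralized model concrete, here is a minimal Python sketch. It is not taken from any real game: `CentralGameServer` and its methods are hypothetical names, and the "database" is just an in-memory dictionary. The point it illustrates is that the only record of who holds an item lives with the operator, who can change it unilaterally.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class CentralGameServer:
    """Hypothetical operator-run server; all names here are illustrative."""

    # item_id -> player_id, stored only in the operator's own database
    inventory: Dict[str, str] = field(default_factory=dict)

    def grant(self, item_id: str, player_id: str) -> None:
        # The operator decides who holds which item.
        self.inventory[item_id] = player_id

    def revoke(self, item_id: str) -> None:
        # The operator can remove an item without the player's consent.
        self.inventory.pop(item_id, None)

    def holder(self, item_id: str) -> Optional[str]:
        # Returns the current holder, or None if the item is not assigned.
        return self.inventory.get(item_id)
```

In this sketch, calling `grant("sword-1", "alice")` and then `revoke("sword-1")` leaves Alice with nothing, and she has no independent record to appeal to.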

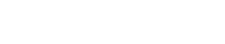

Web3 MMORPGs, by contrast, use blockchain technology to decentralize ownership and control. In-game assets are often represented as non-fungible tokens (NFTs), so ownership is recorded on a shared ledger rather than only in the developer's database, and players can trade or sell those assets themselves. Game data may also be distributed across a network, which could make the game more resilient to censorship and single points of failure. The community often plays a larger role in shaping development through decentralized autonomous organizations (DAOs). However, Web3 MMORPGs are still a nascent field, and many face challenges with scalability, user experience, and regulatory uncertainty.
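The ownership idea behind NFTs can be sketched as a toy ledger in Python. This is a deliberately simplified illustration, not a blockchain and not an implementation of any token standard: `ToyTokenLedger` is a hypothetical name, and the `caller` argument stands in for the cryptographic signature check a real chain would perform. What it shows is the rule that only the current owner of a token can move it.

```python
from typing import Dict


class ToyTokenLedger:
    """Toy stand-in for an on-chain token registry (illustrative only)."""

    def __init__(self) -> None:
        # token_id -> owner address
        self._owners: Dict[str, str] = {}

    def mint(self, token_id: str, owner: str) -> None:
        # Each token id may exist only once (the "non-fungible" part).
        if token_id in self._owners:
            raise ValueError(f"token {token_id!r} already exists")
        self._owners[token_id] = owner

    def owner_of(self, token_id: str) -> str:
        return self._owners[token_id]

    def transfer(self, caller: str, token_id: str, recipient: str) -> None:
        # Assumption: `caller` has already been authenticated. A real chain
        # would verify a signature instead of trusting this argument.
        if self._owners.get(token_id) != caller:
            raise PermissionError("only the current owner can transfer")
        self._owners[token_id] = recipient
```

Under these assumptions, a transfer of Alice's token attempted by the studio raises `PermissionError`, while the same transfer initiated by Alice succeeds. Whether a given game actually enforces this depends on how its contracts are written.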

The choice between a Web2 and Web3 MMORPG depends on individual priorities. Players seeking a polished, established experience with a clear development roadmap might prefer a Web2 game. Those prioritizing player ownership, community governance, and the potential for long-term asset value might be drawn to a Web3 MMORPG, despite the inherent risks and potential for volatility. The landscape of MMORPGs is evolving rapidly, and both Web2 and Web3 models will likely continue to coexist and adapt.
